
Principles for effective Risk Data Aggregation, IT Infrastructure and Governance: turning regulatory obligations into operational and strategic benefits

How can banks maximise business value when implementing increasingly demanding regulatory data management requirements?

Written by Sheta Goswami, Consultant and Vikas Dubey, Senior Consultant. Edited by Maria Nefrou, Managing Consultant and reviewed by Hugo Weitz, Managing Consultant.

Introduction

In the last decade, major banks have made significant efforts to strengthen their risk data aggregation and governance practices. In 2013, the Basel Committee on Banking Supervision published BCBS 239, the Principles for effective risk data aggregation and risk reporting, aiming to establish a robust data management framework that improves internal and regulatory risk reporting and decision-making while reducing losses.

Although banks acknowledge the importance of BCBS 239 and have made progress, achieving full compliance remains challenging. None of the global systemically important banks (G-SIBs) has achieved full compliance, which has led to a prolonged implementation timeline. The scope of application now extends to a wider range of banks, which are assessed on their progress and commitment.

BCBS 239 has become the leading standard, raising the data management expectations of regulators and stakeholders. It applies to various risk management processes, with banks determining the specific areas that require compliance. While the initial focus was on COREP and FINREP reports, the industry has since extended the scope to additional regulatory and internal reporting, making the BCBS 239 principles a “de facto” standard for essential business data.

To implement BCBS 239 effectively, it is recommended to start with a limited scope and gradually expand the data management framework, taking a pragmatic approach that ensures alignment with the principles while delivering the required reports. For that purpose, Finalyse has developed a data governance toolkit that aligns with the regulatory framework and offers a practical, hands-on way to implement industry best practices, resulting in faster implementation. The Finalyse toolkit enables clients to adopt these practices efficiently, starting from a point that is already a few steps down the process.

General topics

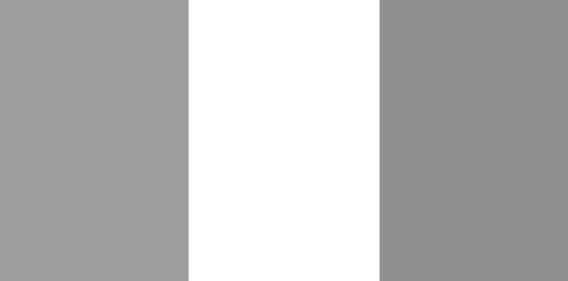

Finalyse recognises the need to invest in a centralised data management framework and effective data governance, and identifies the following focus areas and approach for tackling data management:

A key element of the implementation approach is integrating the project lifecycle and recognising the interdependence between the Risk, IT and Data Offices. This makes it possible to define clear deliverables for each iteration while enabling efficient collaboration.

Smaller banks are learning from the mistakes of larger institutions and are implementing data governance to overcome administrative burdens, outdated data and data quality challenges. To help organisations translate BCBS 239 principles into practical solutions, Finalyse offers a comprehensive toolbox for auditing data maturity and efficiently implementing tailored governance. Our toolkit is highly customisable and provides qualitative inputs for further quantitative analysis. It enables organisations to evaluate their data management frameworks and their compliance with regulatory standards, and to identify priority areas for focus. By addressing reconciliation issues and aligning data functions with technical aspects, our toolkit reduces implementation time and serves as a preliminary step before investing in automation.

Finalyse has elaborated on its overall Data Management and BCBS 239 approach in the following publications:

- Blog-Post: https://www.finalyse.com/blog/implementing-bcbs239

- Service Definition: https://www.finalyse.com/data-management-framework-implementation#c1866

A pragmatic approach to data management implementation

Implementing data governance to manage and leverage data effectively can be a complex and lengthy process. Finalyse promotes a pragmatic approach that sets the basis for capturing the minimum requirements that all organisations in the sector should adhere to, irrespective of their size, complexity and regulatory obligations.

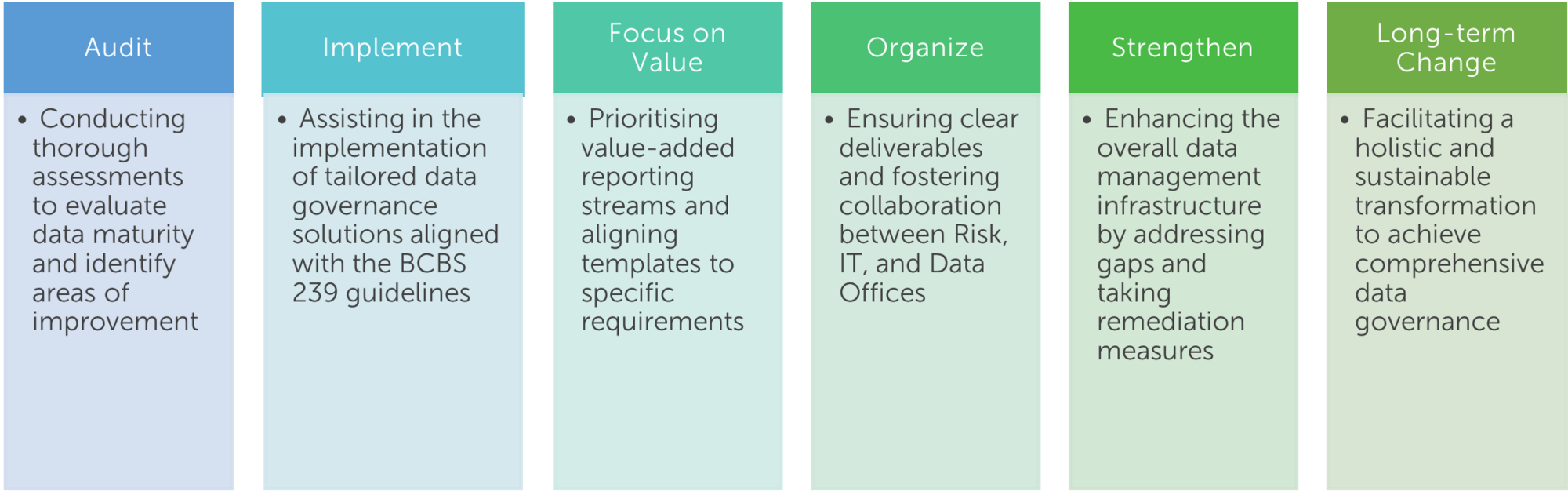

The Data Governance Toolkit offers a comprehensive solution to address challenges associated with implementing and maintaining robust data governance practices.

From establishing a governance framework to ensuring data quality, compliance and security, the toolkit empowers organisations to overcome data governance hurdles and leverage their data as a strategic asset. By adopting the Data Governance Toolkit, organisations can achieve greater data governance maturity, drive informed decision-making and establish a strong foundation for their data-driven initiatives.

Key features of the Data Governance Toolkit

Finalyse has consolidated its best practices and adopted a value-centric approach to BCBS 239 compliance. As a result, it has developed six practical tools, processes and templates, each easily customisable to an organisation’s requirements.

Data Dictionary

The first feature of the toolkit is the Data Dictionary template, which includes a standardised version of the business requirements. Data is grouped into seven attribute categories: individual, organisation, party basis data, party type, regulatory class, relation and risk.

Each data attribute is described and can be linked to the corresponding business requirements, risk domain, and data warehouse location.

Through workshops, business data owners define their priorities and reporting objectives, and assess how necessary and important each attribute is for meeting those objectives. These priorities are a crucial input for an implementation plan that addresses the highest-value attributes first. The template also links data attributes to the reporting streams they contribute to, so that action plans, developments, automation and data quality improvements can be focused on the reports prioritised in the BCBS 239 roadmap.
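As an illustration only, a Data Dictionary entry and the priority-driven planning step described above can be sketched in Python. The seven categories come from the text; the field names, the `dwh_location` format, the numeric priority scale (1 = highest) and the sample attributes are assumptions, not the actual structure of the Finalyse template.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# The seven attribute categories named in the Data Dictionary description.
ATTRIBUTE_CATEGORIES = {
    "individual", "organisation", "party basis data", "party type",
    "regulatory class", "relation", "risk",
}


@dataclass
class DataAttribute:
    name: str
    category: str
    description: str
    risk_domain: str
    dwh_location: str  # hypothetical "schema.table.column" reference
    priority: int      # hypothetical scale: 1 = highest, agreed in business workshops
    reporting_streams: set[str] = field(default_factory=set)

    def __post_init__(self) -> None:
        # Reject categories outside the agreed list to keep the dictionary standardised.
        if self.category not in ATTRIBUTE_CATEGORIES:
            raise ValueError(f"Unknown attribute category: {self.category!r}")
        if self.priority < 1:
            raise ValueError("priority must be a positive integer")


def implementation_plan(attributes: list[DataAttribute], stream: str) -> list[DataAttribute]:
    """Return the attributes feeding one reporting stream, highest priority first."""
    return sorted(
        (a for a in attributes if stream in a.reporting_streams),
        key=lambda a: (a.priority, a.name),
    )


# Illustrative sample data, not taken from any real data dictionary.
attributes = [
    DataAttribute("counterparty_lei", "organisation", "Legal Entity Identifier",
                  "credit", "dwh.party.lei", 1, {"COREP"}),
    DataAttribute("birth_date", "individual", "Date of birth of a natural person",
                  "credit", "dwh.person.birth_date", 3, {"FINREP"}),
    DataAttribute("exposure_class", "regulatory class", "Regulatory exposure class",
                  "credit", "dwh.exposure.class", 2, {"COREP", "FINREP"}),
]
print([a.name for a in implementation_plan(attributes, "COREP")])
# ['counterparty_lei', 'exposure_class']
```

Filtering by reporting stream before sorting mirrors the idea of focusing effort on the reports prioritised in the roadmap rather than on the whole dictionary at once.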

Data Quality Control Inventory (DQCI)

The primary objective of this feature is to provide a clear view of the existing controls and to document them effectively, by identifying the key reporting standards and defining the scope of the controls within the identified reporting framework.

Once the scope of a project or task is established, the relevant data is mapped in a process flow dictionary, which ensures that all data elements in scope are identified, understood and accurately documented. Mapping the data includes capturing information about the specific data fields: their definitions, formats, sources and any other relevant attributes. This step creates a comprehensive inventory of the data elements involved, promoting clarity and consistency in data management. It also provides a clear reference point, supports effective analysis and decision-making, and establishes the controls needed to ensure data integrity and security.

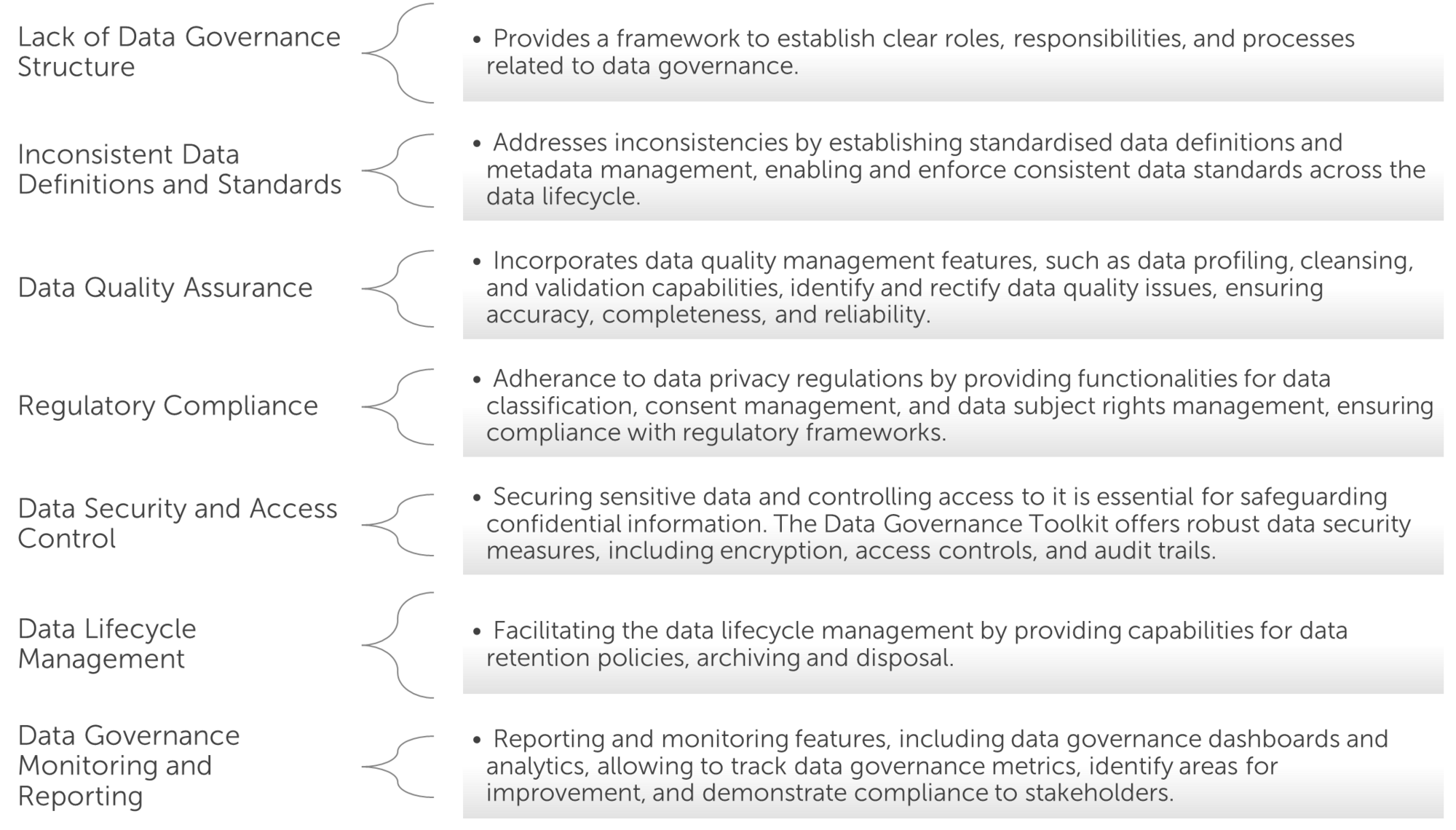

In addition to data mapping, the data dimensions are identified. Data dimensions are the different aspects or categories that give context to the data. Identifying them helps in understanding the factors that influence the data and enables better analysis and decision-making.

The dimensions are described below:

One of the essential components of this tool is the Data Quality Control Index (DQCI). This index comprises columns with dropdown options that facilitate user interaction: users select the appropriate options from these lists, which streamlines the process of applying data quality controls.

Overall, this tool serves as a comprehensive guide to understanding and documenting the existing controls in relation to specific reporting standards. It enables the identification of data elements, dimensions, and associated controls, providing a structured approach to managing data quality in the reporting process.
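A minimal sketch of how a control inventory might tie mapped data elements, dimensions and dropdown-style fields together is shown below. Because the dimension list is not reproduced here, the `Dimension` values are assumed, commonly cited data quality dimensions; `ControlType`, the control IDs, the simplified LEI length check and `run_controls` are hypothetical illustrations, not part of the DQCI itself.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Dimension(Enum):
    # Assumed, commonly cited data quality dimensions; the toolkit's own list may differ.
    COMPLETENESS = "completeness"
    ACCURACY = "accuracy"
    VALIDITY = "validity"
    TIMELINESS = "timeliness"
    CONSISTENCY = "consistency"


class ControlType(Enum):
    # Plays the role of a dropdown column: only these values can be selected.
    AUTOMATED = "automated"
    MANUAL = "manual"


@dataclass(frozen=True)
class DataElement:
    # Data mapping: field name, definition, format and source system.
    name: str
    definition: str
    data_format: str
    source: str


@dataclass(frozen=True)
class Control:
    control_id: str
    element: DataElement
    dimension: Dimension
    control_type: ControlType
    reporting_standard: str
    check: Callable[[dict], bool] | None = None  # True when a record passes


def run_controls(controls: list[Control], records: list[dict]) -> dict[str, float]:
    """Share of records passing each automated control (1.0 when there are no records)."""
    results = {}
    for c in controls:
        if c.control_type is not ControlType.AUTOMATED or c.check is None:
            continue  # manual controls are documented, not executed
        passed = sum(1 for r in records if c.check(r))
        results[c.control_id] = passed / len(records) if records else 1.0
    return results


lei = DataElement("counterparty_lei", "Legal Entity Identifier",
                  "20 alphanumeric characters", "party master data")
controls = [
    Control("DQ-001", lei, Dimension.COMPLETENESS, ControlType.AUTOMATED, "COREP",
            lambda r: bool(r.get("counterparty_lei"))),
    # Simplified validity rule: length only, no checksum verification.
    Control("DQ-002", lei, Dimension.VALIDITY, ControlType.AUTOMATED, "COREP",
            lambda r: len(r.get("counterparty_lei") or "") == 20),
    Control("DQ-003", lei, Dimension.ACCURACY, ControlType.MANUAL, "COREP"),
]
records = [{"counterparty_lei": "ABCDEFGHIJ0123456789"}, {"counterparty_lei": ""}]
print(run_controls(controls, records))  # {'DQ-001': 0.5, 'DQ-002': 0.5}
```

Using enumerations for the dimension and control type keeps entries restricted to predefined values, which is the same purpose the dropdown columns serve in a spreadsheet-based inventory.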

Reporting Inventory

The reporting inventory plays a crucial role in the reporting process as it provides pertinent information, ensuring adherence to harmonised reporting templates. The proposed template follows a specific sequence to streamline the inventory creation:

Scope Definition: The first step involves defining the scope of reports encompassing Risk, Finance, and Regulatory aspects, while ensuring all relevant data is incorporated.

Assignment of Responsibilities: Each report is assigned specific responsibilities, ensuring clear ownership of and accountability for its accurate and timely completion.

Inventory Establishment: The inventory describes all the necessary information related to each report, including details such as report name, purpose, data sources, key stakeholders, and any specific requirements or guidelines.

Validation of Inventory: The inventory must be validated by internal and external contributors and stakeholders, ensuring that all relevant parties have reviewed it and confirmed its accuracy and completeness.

Process Definition: To keep the inventory effective, a process for regular updates must be defined and established. This process sets out the steps, responsibilities and frequency for updating the inventory so that it accurately reflects any changes or additions.

By following this sequence, the reporting inventory becomes a reliable and comprehensive resource that supports efficient reporting processes. It provides a clear overview of the reports, their associated responsibilities and the necessary information, ensuring accurate and consistent reporting across the organisation.
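The sequence above can be mirrored in a simple record structure that makes missing owners, pending validations and overdue updates easy to spot. This sketch assumes one entry per report, a set of required validators and a fixed review interval; the field names, the 90-day default, the sample reports and the `inventory_issues` helper are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class ReportEntry:
    name: str
    scope: str                 # "Risk", "Finance" or "Regulatory" (scope definition)
    purpose: str
    owner: str                 # assignment of responsibilities
    data_sources: list[str]
    stakeholders: list[str]
    validated_by: set[str] = field(default_factory=set)  # validation of inventory
    last_reviewed: date | None = None
    review_every_days: int = 90  # hypothetical update frequency (process definition)

    def is_overdue(self, today: date) -> bool:
        # An entry that was never reviewed is treated as overdue.
        if self.last_reviewed is None:
            return True
        return today - self.last_reviewed > timedelta(days=self.review_every_days)


def inventory_issues(entries: list[ReportEntry], required_validators: set[str],
                     today: date) -> list[tuple[str, str]]:
    """Flag missing owners, pending validations and overdue updates."""
    issues = []
    for e in entries:
        if not e.owner:
            issues.append((e.name, "no owner assigned"))
        missing = sorted(required_validators - e.validated_by)
        if missing:
            issues.append((e.name, "awaiting validation by " + ", ".join(missing)))
        if e.is_overdue(today):
            issues.append((e.name, "update overdue"))
    return issues


# Illustrative entries only.
entries = [
    ReportEntry("COREP credit risk", "Regulatory", "Own funds requirements",
                "Regulatory Reporting", ["DWH"], ["CRO"],
                validated_by={"Risk", "Finance"}, last_reviewed=date(2024, 5, 1)),
    ReportEntry("Liquidity dashboard", "Risk", "Internal liquidity monitoring",
                "", ["Treasury system"], ["ALCO"]),
]
for issue in inventory_issues(entries, {"Risk", "Finance"}, today=date(2024, 6, 30)):
    print(issue)
```

Running such a check periodically is one way to operationalise the "regular updates" step, since it turns the inventory from a static list into something that reports its own gaps.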

EUC Scoring Template

End-User Computing (EUC) applications are widely used in organisations for tasks ranging from financial modelling to data analysis, adding another layer of complexity to managing and governing data effectively.

The proposed data governance toolkit offers a comprehensive EUC scoring template, designed to evaluate the risks posed by EUCs, with the following features:

Risk Identification: The template supports identifying the EUCs used within the organisation and categorising them by criticality and complexity, laying the foundation for understanding the potential risks associated with each application.

Risk Assessment: It enables organisations to assess various risk dimensions, including data integrity, operational risk, and compliance, providing a scoring framework to evaluate each risk dimension, and allowing for a comprehensive analysis of EUC risks.

Mitigation Strategies: Once risks are identified and assessed, the EUC scoring template assists in developing effective mitigation strategies, while guiding organisations in implementing control measures, such as improved documentation, version control, user access restrictions, and regular review processes.

Enhanced Data Governance: By evaluating and addressing EUC risks, organisations can strengthen their overall data governance framework, leading to increased data integrity, reliability, and compliance with regulatory requirements.

Improved Decision-Making: The template enables organisations to make more informed decisions by ensuring accuracy and reliability of EUC-generated data. With a clearer understanding of EUC risks, management can confidently rely on these applications for critical decision-making processes.

Cost and Time Savings: Proactively managing EUC risks helps minimise the potential for errors, data inconsistencies, and costly remediation efforts, by streamlining processes and reducing manual interventions.
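To make the scoring idea concrete, the sketch below shows how an EUC score could combine criticality, complexity and the three risk dimensions mentioned above. The 1 to 5 scales, the dimension weights, the geometric-mean exposure term, the tier thresholds and the sample workbook are all assumptions chosen for illustration; an actual template would calibrate them to the organisation's risk appetite.

```python
from __future__ import annotations

from dataclasses import dataclass
from math import sqrt

# Hypothetical weights for the risk dimensions named in the template description.
DIMENSION_WEIGHTS = {"data_integrity": 0.4, "operational": 0.3, "compliance": 0.3}


@dataclass
class EUC:
    name: str
    criticality: int                   # 1 (low) .. 5 (high)
    complexity: int                    # 1 (low) .. 5 (high)
    dimension_scores: dict[str, int]   # each 1 (low risk) .. 5 (high risk)


def euc_risk_score(euc: EUC) -> float:
    """Score between 1 and 25: inherent exposure times weighted dimension risk."""
    if set(euc.dimension_scores) != set(DIMENSION_WEIGHTS):
        raise ValueError("dimension_scores must cover exactly the weighted dimensions")
    values = {"criticality": euc.criticality, "complexity": euc.complexity,
              **euc.dimension_scores}
    for label, value in values.items():
        if not 1 <= value <= 5:
            raise ValueError(f"{label} must be between 1 and 5, got {value}")
    # Weights sum to 1, so the weighted dimension risk stays on the 1 .. 5 scale.
    dimension_risk = sum(DIMENSION_WEIGHTS[d] * s for d, s in euc.dimension_scores.items())
    exposure = sqrt(euc.criticality * euc.complexity)  # geometric mean, 1 .. 5
    return round(exposure * dimension_risk, 2)


def euc_tier(score: float) -> str:
    # Hypothetical thresholds that would drive the depth of mitigation measures.
    if score >= 15:
        return "High"
    if score >= 6:
        return "Medium"
    return "Low"


workbook = EUC("Provisioning overlay workbook", criticality=5, complexity=4,
               dimension_scores={"data_integrity": 4, "operational": 3, "compliance": 5})
score = euc_risk_score(workbook)
print(score, euc_tier(score))
```

Taking the geometric mean of criticality and complexity keeps the exposure term on the same 1 to 5 scale as the dimension scores, so the final score stays between 1 and 25 and the tiers remain easy to interpret.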

Project Data Maturity Scoring

Project data maturity refers to the level of data management and governance practices within an organisation's project. It encompasses processes, tools, and methodologies used to collect, analyse, store, and maintain project data. Assessing project data maturity allows organisations to identify gaps and implement improvements that enhance data quality and the overall project management lifecycle. The proposed template features the following items:

Maturity Assessment: It assesses various dimensions of project data maturity, such as data governance, data quality, data integration, data security, and data lifecycle management, providing insights on the existing data management practices.

Scoring Framework: It provides a structured scoring framework that enables organisations to measure the maturity level of each dimension, assign scores based on predefined criteria, identify areas for improvement and prioritise their data management efforts accordingly.

Actionable Recommendations: Actionable recommendations and best practices to enhance data management enable organisations to implement strategies such as data governance frameworks, data quality controls, data integration standards, and data security measures.

Improved Data Quality: By assessing project data maturity, organisations can identify data quality gaps and implement measures to enhance data accuracy, completeness and consistency. This results in reliable and trustworthy project data and supports informed decision-making.

Compliance and Risk Mitigation: Assessing project data maturity helps ensure compliance with data privacy regulations and mitigate data-related risks. By implementing data governance and security measures, organisations can protect sensitive project data, maintain regulatory compliance and safeguard their reputation.
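A weighted maturity score with gap-based prioritisation could look like the sketch below. The five dimensions are taken from the text; the 1 to 5 level names, the weights, the target levels and the alphabetical tie-breaking rule are assumptions, not the template's actual criteria.

```python
from __future__ import annotations

# Dimensions named in the template description; levels, weights and targets are hypothetical.
LEVELS = {1: "Initial", 2: "Repeatable", 3: "Defined", 4: "Managed", 5: "Optimised"}


def maturity_report(scores: dict[str, int], targets: dict[str, int],
                    weights: dict[str, float]) -> tuple[float, str, list[str]]:
    """Return the weighted overall score, its level name and the dimensions to tackle first."""
    for mapping in (scores, targets):
        for dim, level in mapping.items():
            if level not in LEVELS:
                raise ValueError(f"{dim}: level must be between 1 and 5, got {level}")
    total_weight = sum(weights[d] for d in scores)
    overall = sum(scores[d] * weights[d] for d in scores) / total_weight
    # A gap exists where the current level is below the target level.
    gaps = {d: targets[d] - scores[d] for d in scores if targets[d] > scores[d]}
    # Largest weighted gaps first; ties broken alphabetically for a stable order.
    priorities = sorted(gaps, key=lambda d: (-gaps[d] * weights[d], d))
    return round(overall, 2), LEVELS[int(overall)], priorities


scores = {"data governance": 2, "data quality": 3, "data integration": 2,
          "data security": 4, "data lifecycle management": 1}
targets = dict.fromkeys(scores, 4)
weights = dict.fromkeys(scores, 1.0)
weights["data quality"] = 2.0  # hypothetical: data quality weighted higher
print(maturity_report(scores, targets, weights))
```

Ranking weighted gaps rather than raw gaps lets an organisation push the dimensions that matter most for its prioritised reports up the improvement list.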

BCBS239 Assessment Questionnaire Template

To support the assessment of BCBS 239 compliance, the Data Governance Toolkit also offers an Assessment Questionnaire Template. Its key features are listed below:

Comprehensive Coverage: The questionnaire covers the key principles outlined in BCBS239, addressing areas such as data governance, risk data aggregation capabilities, reporting processes, data quality management, and technology infrastructure.

Evaluation Framework: The template provides a structured framework to assess compliance with each BCBS239 principle. It includes a series of targeted questions that prompt organisations to evaluate their current practices and identify areas for improvement.

Actionable Recommendations: Based on the assessment results, the template offers actionable recommendations to enhance compliance with BCBS 239. These recommendations guide financial institutions in implementing necessary changes, such as establishing data governance committees, enhancing data quality controls, improving data lineage documentation, and strengthening reporting frameworks.

Regulatory Compliance: The toolkit helps assess adherence to BCBS 239 requirements, helping to ensure that regulatory expectations are met and that penalties and reputational damage are avoided, while demonstrating a commitment to sound risk data management.

Enhanced Risk Management: Evaluating compliance with BCBS 239 principles allows institutions to identify gaps in risk data aggregation and reporting. By implementing the toolkit's recommendations, organisations can enhance their risk management capabilities, leading to more informed decision-making and improved risk mitigation strategies.

Efficiency and Transparency: The toolkit promotes efficiency and transparency in risk data processes. It assists organisations in streamlining data governance practices, establishing standardised data definitions, and improving data quality. This, in turn, facilitates smoother operations and reliable reporting mechanisms.
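Questionnaire answers could be rolled up per principle along the lines of the sketch below. The principle names follow BCBS 239 (principles 12 to 14 address supervisors and are omitted); the four-point answer scale, the "weakest answer" aggregation rule, the gap threshold and the question identifiers are assumptions, not the toolkit's actual scoring method.

```python
from __future__ import annotations

from collections import defaultdict

# The eleven BCBS 239 principles addressed to banks (12 to 14 concern supervisors).
PRINCIPLES = {
    1: "Governance", 2: "Data architecture and IT infrastructure",
    3: "Accuracy and integrity", 4: "Completeness", 5: "Timeliness", 6: "Adaptability",
    7: "Accuracy", 8: "Comprehensiveness", 9: "Clarity and usefulness",
    10: "Frequency", 11: "Distribution",
}
# Assumed four-point answer scale.
RATINGS = {1: "Non-compliant", 2: "Materially non-compliant",
           3: "Largely compliant", 4: "Fully compliant"}


def assess(answers: list[tuple[int, str, int]]):
    """answers: (principle number, question id, score 1..4) triples."""
    by_principle: dict[int, list[int]] = defaultdict(list)
    for principle, question_id, score in answers:
        if principle not in PRINCIPLES or score not in RATINGS:
            raise ValueError(f"Invalid answer to {question_id}: "
                             f"principle {principle}, score {score}")
        by_principle[principle].append(score)
    # Conservative rule (an assumption): a principle is rated by its weakest answer.
    ratings = {p: min(s) for p, s in sorted(by_principle.items())}
    gaps = [p for p, r in ratings.items() if r < 3]  # below "Largely compliant"
    unassessed = [p for p in PRINCIPLES if p not in ratings]
    return ratings, gaps, unassessed


answers = [(1, "Q1.1", 3), (1, "Q1.2", 4), (2, "Q2.1", 2), (3, "Q3.1", 4), (6, "Q6.1", 3)]
ratings, gaps, unassessed = assess(answers)
for p, r in ratings.items():
    print(f"P{p} {PRINCIPLES[p]}: {RATINGS[r]}")
print("Gaps:", gaps, "Not yet assessed:", unassessed)
```

Listing unassessed principles separately from gaps keeps a partial, scope-limited assessment honest about what has not yet been covered.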

Conclusion

BCBS 239-related governance should now be considered for implementation in a wider range of organisations, not only systemically important institutions. Smaller banks and late adopters can benefit from the extensive experience gathered over the years in multiple data governance projects and use it as a basis for implementing minimum data governance standards.

In summary, the Finalyse toolkit offers hands-on best practices for setting up new governance from scratch or for auditing existing governance that has failed to deliver the desired results. The toolkit is highly customisable to the specificities of each bank.

Finalyse recommends ‘starting small’ and ‘growing steadily’: identify a reporting stream to analyse as a priority, then deploy and adapt the toolkit for that specific stream. Next, gain insight into how to make it achieve the specific goals of the organisation, and then extend the scope to new reporting streams iteratively.

Applying this agile way of working ensures that the value expected from the governance is delivered as early as possible, starting with a small scope. Keeping the effort proportionate to the value it creates for reports and data owners is a key success factor when implementing a new data management framework.

Do not hesitate to request a free workshop to be introduced to the different features of the toolbox and to identify the parts and approach that would suit your governance goals.
