Implementation and Change Management Associated with Sophisticated Tools – A glimpse at QRM

Written by Maria Nefrou, Managing Consultant

This article discusses the challenges and risks of implementing changes on complex tools that use advanced databases, and proposes a high-level change management process that covers the needs of such implementations. The QRM Analytical Framework is used as the basis for the suggested practices, drawing on our experience with the system; however, the notions described here may apply to other sophisticated tools of a similar nature.

Introduction

Leveraging on QRM in ALM and Market Risk Context

QRM (Quantitative Risk Management) is a leading Asset & Liability Management (ALM) system widely used by banks that are subject to high regulatory scrutiny from a market and liquidity risk perspective. Within the ALM and Market Risk context, the QRM Framework enables users to model complex assets, liabilities, and off-balance-sheet instruments. It prices instruments using pricing models such as Rate Models, and also makes it possible to build Behavioural Models that capture (parameterised) prepayment, re-pricing and downgrade characteristics. Beyond modelling and calculation, the tool lets institutions create customised reports that reflect their business requirements. Reports are generated and retrieved using standard OLAP and Microsoft Reporting Services technologies, based on customisable risk measures built by the users. Information within the QRM Framework is processed at transaction level, but can also be rolled up and aggregated over either a predefined set of hierarchies or institution-specific hierarchies, built by the user in the QRM tool using customised dimensions.

A robust change management process is required

Implementing Balance Sheet reporting solutions in the QRM Analytical Framework, with the purpose of maximising the aforementioned benefits, can be a challenging process. Institutions operating in a closely monitored and rapidly evolving regulatory environment need the capacity to adopt robust change management processes that can be adapted to the systems employed across the organisation. This need becomes even more pressing when implementing complex systems that often represent a ‘black box’ for their users and therefore add an additional layer of complexity to the change process. The QRM Analytical Framework undoubtedly falls into this category.

Limitations to using the QRM Analytical Framework

The proprietary nature of the system’s calculation mechanisms and underlying operating functions, coupled with a wide range of functionalities, makes it a significant challenge for non-expert users to understand and control the system. Within organisations, QRM knowledge is usually limited to small groups and specific functions that have become familiar with the system after a lengthy engagement (commonly measured in years) with the Framework’s processes. QRM experts in the market are also few, given the company’s strict policy regarding assigned users. Even though QRM supports its clients well, by assigning in-house experts and through its well-maintained communication platform, the processes remain opaque, which makes it harder to adjust the system to new business or regulatory requirements.

The QRM Analytical Framework in a Nutshell

The QRM Analytical Framework is built on a Microsoft Windows platform. It uses Microsoft SQL Server as its primary data store and, because it applies Extract, Transform and Load (ETL) technology, can source data from various source systems. Using databases stored within the Framework, which carry the base configuration (shell) that supports the runs, the application loads and processes the selected data with a specified set of parameters. The results of the data processing are stored in OLAP cubes, and can also be extracted into Excel files that maintain a connection to the cubes.

Some of the basic elements and functions of the QRM Analytical Framework are:

- The actual QRM database, which includes the configuration used by the process runs:

  - Market parameters (rates, curves, currencies, etc.)

  - Assumptions (default & runoff functions, aggregation rules, etc.)

  - Run parameters (analytical methods, etc.)

  - Strategies and scenarios

  - Dimensions

  - Models (Rates Models, Behavioural Models, etc.)

- The data processing function, comprising:

  - Selection of portfolio, strategy/scenario combinations

  - Planning run (data inputs and model configuration via analytics)

  - Extraction of data onto the cubes

- Last but not least, the report generation process, which mainly includes:

  - Selection of the desired portfolio that has been processed

  - Selection of the report type in which the data will be presented

QRM runs (processing) can be executed either directly via the QRM Framework application or, even more efficiently, via Visual Basic scripts that connect to the application and trigger the steps needed to run the required processes.

The QRM Change Implementation Process

Configuration

Implementing configuration changes on a QRM database should be a straightforward task, with most of the workload falling on the development teams. Deploying these changes, however, raises a few challenges for the institution’s change management process, given the high complexity of the tool, its large range of functionalities and the numerous interlinks between different modules and features.

QRM aims to provide maximum customisation of the database functionalities, which implies that most modules and features have a rather detailed structure. Deploying changes usually involves a set of manual and particularly detailed steps. This entails a large operational risk, as it makes the databases sensitive to human error. Even though QRM has a good set of rules to prevent erroneous actions, there may still be limited control over intended versus unintended changes carried from one version to another. The lack of an option to instantly reverse actions should also be noted. Once the ‘Editor’ for a module is activated, any valid change can be applied, and once it is saved, it is permanently registered in the database. Reversing a change often requires applying a new change that undoes the first.

It should be noted, however, that for a great number of configuration items QRM allows individual extraction to an external file (e.g. an Excel file), which enables the user to apply changes outside the Framework and to employ more efficient version control. This may also contribute to the creation of change evidence for potential audits. When available, this option is preferable to making changes directly in the Framework.
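As an illustration of how extracted configuration files can support version control and audit evidence, the following is a minimal sketch that compares two exported snapshots and flags changes not covered by the documented change. The CSV layout (`item`, `value` columns), the item names and the helper functions are all hypothetical; they do not reflect an actual QRM export format.

```python
import csv
import io


def load_config(csv_text):
    """Parse an exported configuration snapshot into {item: value}.

    The two-column layout is an assumption made for this sketch.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    return {row["item"]: row["value"] for row in reader}


def diff_config(before, after):
    """Return (added, removed, modified) items between two snapshots."""
    added = {k: after[k] for k in after.keys() - before.keys()}
    removed = {k: before[k] for k in before.keys() - after.keys()}
    modified = {
        k: (before[k], after[k])
        for k in before.keys() & after.keys()
        if before[k] != after[k]
    }
    return added, removed, modified


def unintended_changes(before, after, intended_items):
    """List changed items that the documented change does not cover."""
    added, removed, modified = diff_config(before, after)
    changed = set(added) | set(removed) | set(modified)
    return sorted(changed - set(intended_items))


# Hypothetical snapshots: the 'live' copy and the copy after development.
LIVE = "item,value\ncurve.EUR,swap_6m\nrunoff.mortgage,cpr_5\ndim.region,v1\n"
CANDIDATE = "item,value\ncurve.EUR,ois\nrunoff.mortgage,cpr_5\ndim.region,v2\n"

before = load_config(LIVE)
after = load_config(CANDIDATE)

# Only the EUR curve change was documented; anything else needs review.
print(unintended_changes(before, after, intended_items={"curve.EUR"}))
```

Storing each snapshot alongside the output of such a comparison gives reviewers a simple record of what changed between versions and why.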

In addition, one should be mindful of the many interlinks between the different modules in the database. One change may trigger a series of changes in different places adding a risk of unintentionally and unexpectedly disrupting other processes served by the same database.

What happens, though, when different development work is taking place for the same ‘live’ production database? For efficient production, there may exist only one version of the database, serving a large range of functionalities across one or more processes. Different developers may therefore work in parallel, applying and testing changes and new features on their respective copies of the ‘live’ version of the QRM database. Merging these copies is not possible, so the changes need to be applied together, ensuring that no configuration changes overlap in a way that may disrupt the different functionalities and processes.
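Checking for overlaps before the changes are applied together can be partly automated if each developer documents the configuration items they touch. The sketch below is one possible way to do this, assuming each change set is recorded as a set of item names; the developer labels and item names are hypothetical.

```python
from itertools import combinations


def find_overlaps(change_sets):
    """Return {(dev_a, dev_b): shared_items} for each pair touching the same items.

    change_sets maps a developer (or work stream) to the set of
    configuration items changed on their copy of the database.
    """
    overlaps = {}
    for (dev_a, items_a), (dev_b, items_b) in combinations(
        sorted(change_sets.items()), 2
    ):
        shared = set(items_a) & set(items_b)
        if shared:
            overlaps[(dev_a, dev_b)] = sorted(shared)
    return overlaps


# Hypothetical documented change sets from three parallel work streams.
change_sets = {
    "dev_liquidity": {"curve.EUR", "dim.region"},
    "dev_irrbb": {"runoff.mortgage", "dim.region"},
    "dev_reporting": {"report.nii"},
}

# Any pair listed here must agree on the shared items before deployment.
print(find_overlaps(change_sets))
```

An empty result does not prove the changes are independent, since interlinked modules may still interact, but a non-empty one identifies conflicts that must be resolved before the combined build.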

Tips and Tricks

In order to mitigate such risks, an orderly change process must be in place and followed fastidiously:

- Documenting in detail the required changes, possibly grouped by interlinked functionalities, and the set of steps to be followed for deploying them into a new clean version of the database, usually a copy of the ‘live’ version;

- Frequently backing up databases which are under development, preferably following the application of one change, or interlinked group of changes, and after these are properly tested and verified, based on the verified documented steps;

- Last but not least, it would be advisable that adjusted QRM databases are not passed directly from individual developers into the stream of deploying to production, but rather the final combined, tested and documented changes are applied altogether on a copy of the ‘live’ version, by an assigned team of experts.

Agile Models for Software Implementation

A good change management process is largely correlated with the clear definition of the requirements that trigger it, along with the ability to allow for transparency and traceability of changes. Deploying changes within the QRM Framework demands no less. In fact, when dealing with such a level of complexity, even this may not be enough.

Institutions should create a well-defined environment to accommodate the QRM Framework and should deploy strict procedures to protect and sustain the information that is put into production.

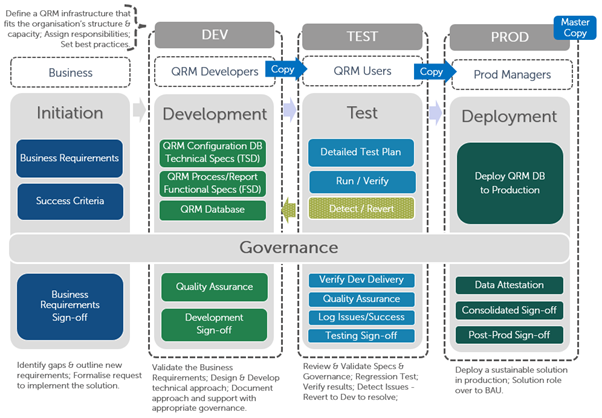

Starting from the Initiation phase, the business must detail, in the Business Requirements Document (BRD), all the end requirements the implemented solution aims to attain. The BRD should disclose sufficient information for the QRM technical team to design and build the appropriate solution, and should also provide the Success Criteria needed to quantify and qualify the level of achievement accurately.

Within the Development phase, developers are called on to translate the requirements into a technical solution for QRM, which must be detailed in the QRM Technical Specification Document (TSD). The QRM TSD must contain all the information associated with the base configuration of the QRM Database, i.e. market parameters (rates, curves, currencies, etc.), assumptions, run parameters, strategies, scenarios, etc. Next, a detailed Functional Specifications Document (FSD) must be composed, focusing on what information is used downstream and how, preferably with one document dedicated to each target report, to assist the sign-off process and maintenance. Last but not least, developers may perform the final build of the actual QRM Database that will be passed on to UAT, a process that takes into account the mitigation rules explained earlier.

The Test Plan depends on the developed solutions and on the severity of the configuration changes made in the database. In all cases, a detailed testing plan covering all directly and indirectly affected features must be put in place to guard against the risk of unintended changes being deployed to Production, as explained earlier. Defects may be referred back to the development experts.

The Development as well as the Testing phase must be supported and controlled by a robust Quality Assurance process that records and advises upon the change process, while making available quality related evidence for a potential Audit on the new deployment.

At the end of the process, the Deployment of the new solution to Production must follow a peer review of the preceding steps and a Consolidated Sign-off from a change management function, in order to secure confidence in the implemented change.

Testing

Thorough testing is time-consuming, and testing every single functionality of the QRM database each time a change is applied within the Framework requires considerable effort. However, as explained earlier, the complexity of the tool, coupled with limited knowledge of the details and interconnections within modules, demands verification that the intended changes are transferred to Production with no disruption to other processes.

From a processing perspective, best practice suggests that, even if the applied changes concern a single QRM process, all processes that use the same QRM configuration database need to be tested again. Setting up appropriate KPIs on run-time, capacity, completion and extraction of the end reports is essential for accurate and efficient quality control.
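A KPI check of this kind could be sketched as follows. The process names, fields and thresholds are illustrative assumptions, not values taken from QRM; in practice the run results would be collected from whatever logs or monitoring the institution has in place.

```python
from dataclasses import dataclass


@dataclass
class RunResult:
    """Outcome of one process run (hypothetical fields)."""
    process: str
    runtime_minutes: float
    completed: bool
    reports_extracted: int


@dataclass
class KpiLimits:
    """Agreed thresholds for one process (illustrative values only)."""
    max_runtime_minutes: float
    expected_reports: int


def check_run(result, limits):
    """Return a list of KPI breaches; an empty list means the run passed."""
    breaches = []
    if not result.completed:
        breaches.append("run did not complete")
    if result.runtime_minutes > limits.max_runtime_minutes:
        breaches.append("runtime above limit")
    if result.reports_extracted != limits.expected_reports:
        breaches.append("unexpected number of reports extracted")
    return breaches


def check_all(results, limits_by_process):
    """Check every process sharing the database, not only the changed one."""
    report = {}
    for result in results:
        breaches = check_run(result, limits_by_process[result.process])
        if breaches:
            report[result.process] = breaches
    return report


limits_by_process = {
    "liquidity": KpiLimits(max_runtime_minutes=90, expected_reports=4),
    "irrbb": KpiLimits(max_runtime_minutes=120, expected_reports=6),
}
results = [
    RunResult("liquidity", runtime_minutes=75, completed=True, reports_extracted=4),
    RunResult("irrbb", runtime_minutes=140, completed=True, reports_extracted=5),
]

# Processes missing from the output met all of their KPIs.
print(check_all(results, limits_by_process))
```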

Quantified data testing is easily achieved when the QRM reports are extracted to Excel at the end of the process. These reports carry connections to the respective OLAP cubes on Microsoft SQL Server, which allow for straightforward and user-friendly data screening at a multidimensional level. Such connections can also be used by the business to create customised reports that serve specific templates, using Excel cube functions (e.g. CUBESET) that pull data from OLAP cubes directly into Excel.
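Once report values have been extracted, the comparison between a baseline run and a run on the changed database can be scripted. The sketch below assumes the values have already been read into dictionaries keyed by (dimension member, measure); the keys, figures and tolerances are hypothetical.

```python
import math


def reconcile(baseline, candidate, rel_tol=1e-6, abs_tol=1e-9):
    """Return (key, baseline_value, candidate_value) for every mismatch.

    A key present in only one extract is reported with None on the
    missing side; numeric values are compared within the given tolerances.
    """
    differences = []
    for key in sorted(baseline.keys() | candidate.keys()):
        old = baseline.get(key)
        new = candidate.get(key)
        if old is None or new is None:
            differences.append((key, old, new))
        elif not math.isclose(old, new, rel_tol=rel_tol, abs_tol=abs_tol):
            differences.append((key, old, new))
    return differences


# Hypothetical extracts keyed by (dimension member, measure).
baseline = {
    ("Mortgages", "NII"): 120.5,
    ("Deposits", "NII"): -45.2,
    ("Swaps", "NII"): 10.0,
}
candidate = {
    ("Mortgages", "NII"): 120.5,
    ("Deposits", "NII"): -45.9,
    ("Bonds", "NII"): 3.1,
}

for key, old, new in reconcile(baseline, candidate):
    print(key, old, new)
```

Each reported difference should be traceable to a documented change; any that is not points to an unintended side effect.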

Concerning quality control of the data made available to the business via the Production cubes, it is important to take a holistic approach when testing the dimensions built within the databases, whether or not they are actively used by the business at the time. Production data must be verified and qualified for downstream application at all times. Using such tools over the years, while environments evolve, often results in an accumulation of changes that leave residual data obsolete yet still accessible to the business. Time, resource and knowledge constraints may make it challenging for organisations to maintain clean QRM databases in Production, but it is essential that they take the chance to do so. Testing of new QRM elements and features should be viewed as an opportunity to peer review the system and the information it generates.

Conclusion

In an ever-growing and demanding regulatory environment, institutions seek to increase efficiency in time, effort and quality, while minimising operational risks associated with human error. Technology companies from within the industry come to the rescue, offering complex tools that promise to tackle such concerns by absorbing the burden of knowledge of the regulatory framework, methodologies and report production.

However, these tools also introduce the challenge of mastering them. Small errors in the QRM databases, caused by a poor understanding of the underlying functionalities and features, may significantly influence the output and alter the information made available to internal and external parties.

Institutions may lack the time and capacity to address these challenges adequately, but as they remain fundamentally responsible for the information held in Production, and accountable for the consequences when quality issues occur, it is highly important that they do so.

Maximising control over the information put into Production via such tools, by:

- investing in and promoting the development of QRM knowledge among individual users through training and adequate supporting documentation,

- maintaining a strong, structured and sufficiently controlled change management process supported by thorough and clear documentation,

- building and employing good practices that support the involved activities,

- investing time and effort to take a holistic view of the impact changes have on the overall database, and to assess the accumulation of changes over time,

- sustaining clean and well-structured QRM databases,

may be a quick answer to the “black box” challenge. Because anything that can go wrong, if overlooked, will go wrong.

The extensive experience held by Finalyse Risk Advisory and partners, in assisting our clients to build customised, efficient and comprehensive implementation roadmaps that best fit the institution’s internal and external requirements, while implementing the appropriate solutions into acquired systems, has proven to bring great value to our clients, and it is provided as a complementary service for the majority of our core regulatory compliance services.
